First off, no one should take health advice or trust news from an Instagram post, a tweet, or a Facebook post, especially from someone they don’t know personally! But many people do just that, and they are likely being manipulated by online “bots” (short for “robots”): social media accounts that aren’t run by a human but are programmed by humans to sound like one.

When Jon-Patrick Allem, PhD, a researcher at the Keck School of Medicine, first decided to study bots on social media in 2015, funding was hard to come by. He says funders didn’t think bots really existed or, if they did, that they had no impact on human behavior.

Around that time, several research teams were using social media to study people’s attitudes about health behaviors like smoking and getting vaccinated. By observing bots, Allem wanted to find out whether they were undermining the quality of this research.

Allem explains that if posts from bots are counted in the data, a scientist might get a very inaccurate picture of people’s actual views. “If you’re a researcher, and you think there’s value in social media data to understand some phenomena that you’re interested in, and that phenomena is tied to the public or human attitudes and behaviors, you really want to ensure that the data you’re collecting is as representative of your population of interest as possible,” he says.

A few months later, during the 2016 presidential campaign, social bots took the spotlight. Researchers found evidence of coordinated disinformation campaigns that used bots to amplify divisive or inaccurate messages so they would reach real people. While many people focused on bots sowing political discord, Allem wondered whether social media bots could be sending confusing messages about health as well. He shifted his research to focus on how these posts might affect people’s behavior offline.

The idea that bots may be sharing health misinformation has taken on new urgency during the pandemic. Since COVID-19 emerged, researchers have begun asking whether information shared by bots influences people’s behavior, such as whether they get vaccinated, avoid crowds, or reach for supplements that promise to boost immunity.

Some researchers believe half of all tweets with the #COVID19 hashtag have come from bots. And bots tweet about all kinds of health topics, not just COVID-19. Their programmers’ motivations aren’t always clear. So how can you tell whether the posts you see on social media come from bots or from real people?

What Is a Bot?

A bot is a social media account controlled by software that makes it interact in specific ways. “They can be useful or humorous, whatever the case is,” says Allem. Some bots work as news aggregators, retweeting or sharing articles associated with specific topics or hashtags. They aren’t inherently bad. For example, a neighborhood health clinic could use marketing software to post daily public service announcements reminding you to wash your hands, an example cited by The New York Times.

Some bots are very basic: companies or people use software to program an account to post items of interest on a regular schedule. Others use artificial intelligence to engage directly with other users, learning to mimic human posts better as they go.

What Kinds of Information Do Bots Share?

The US Department of Homeland Security (USDHS) has published an infographic describing some of the functions of social bots, though it offers no information specific to bots that post about health-related behaviors. According to the infographic, bots can be programmed to act as customer service representatives or to promote product sales. They can produce and amplify hate speech and harassment. They can encourage civic engagement. They can make certain people or products seem more popular than they are.

The same year the USDHS published its infographic, a study in the American Journal of Public Health showed that bots frequently shared anti-vaccine posts about vaccinations of all kinds. The study cited previous work showing that such posts have an impact: people who see them are more likely to delay vaccinations.

Often, the purpose of a bot isn’t obvious. Allem says some of his biggest surprises in the field have been the types of information bots promote. “Some of it has clear implications, let’s say, for financial gain. If you’re selling a product or service and you want to amplify the message using this approach, that kind of makes sense. Well, it makes sense if you’re comfortable deceiving your customers,” he says. “But some of these claims we’ve come across, I don’t see who could benefit from it. It just seems to be completely irrational. Just making up claims and polluting topics of conversation for the sake of polluting it.”

One example, he says, is that many bots have been programmed to write posts telling readers that spending time in a sauna will help them quit smoking. “We’ve seen a ton of messages promoting that one act,” he says. “I’m assuming the average person couldn’t name one sauna company,” which suggests that these messages aren’t driving sauna sales.

How Do You Tell if a Post Is From a Bot?

In short, it’s not easy. As programming grows more sophisticated, bots are getting harder to spot. And, Allem says, even if an account displays automated activity, the person who programmed it can pause that activity and post or respond to messages personally, making it even harder to say definitively whether an account is a bot.

MIT Technology Review has offered some tips. An account whose profile offers very little information or uses a generic photo might be a bot. So might an account that constantly tweets about the same topic, tweets far more often than an average person, or tweets at odd times, such as around the clock. A study published in April 2020 suggested that Twitter bots and accounts managed by real people behave in some notably different ways, but also that bots gradually became more human-like in their posting over the course of the study.
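For readers who think in code, the warning signs above can be sketched as a toy checklist. This is only an illustration under stated assumptions: the `Account` fields, the `bot_warning_signs` function, and every threshold (a bio under 20 characters, more than 50 posts a day, activity in 20 or more hours of the day) are hypothetical choices, not rules from MIT Technology Review, Botometer, or the April 2020 study.

```python
from dataclasses import dataclass, field


@dataclass
class Account:
    # Hypothetical, simplified view of a public profile; field names are illustrative.
    bio: str
    has_default_photo: bool
    posts_per_day: float
    topics: list[str] = field(default_factory=list)      # topic of each recent post
    active_hours: set[int] = field(default_factory=set)  # hours of day (0-23) with posts


def bot_warning_signs(acct: Account) -> list[str]:
    """Return which of the tip-list warning signs an account shows.

    All thresholds below are arbitrary assumptions chosen for illustration.
    """
    signs = []
    # Sparse or generic profile: very short bio or a default photo.
    if len(acct.bio.strip()) < 20 or acct.has_default_photo:
        signs.append("sparse or generic profile")
    # Constantly posting about the same topic: many recent posts, one topic.
    if len(acct.topics) >= 10 and len(set(acct.topics)) == 1:
        signs.append("posts constantly about one topic")
    # Posting far more often than a typical person.
    if acct.posts_per_day > 50:
        signs.append("posts far more often than a typical person")
    # Posting at odd times, e.g. nearly every hour of the day and night.
    if len(acct.active_hours) >= 20:
        signs.append("active nearly around the clock")
    return signs


if __name__ == "__main__":
    suspect = Account(bio="", has_default_photo=True, posts_per_day=120,
                      topics=["sauna"] * 30, active_hours=set(range(24)))
    print(bot_warning_signs(suspect))
```

As Allem notes, a person can pause the automation and post genuinely, so a checklist like this will both miss bots and flag humans who schedule posts. Treat any flag as a prompt for skepticism, not a verdict.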

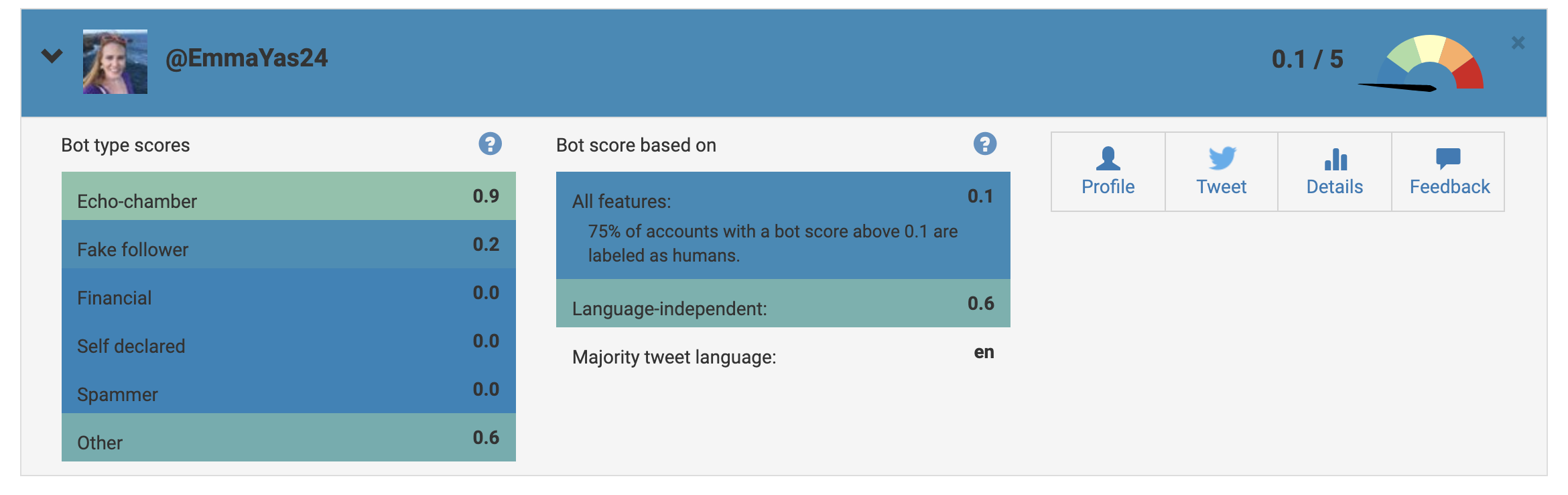

The paper cited a tool called Botometer, developed by researchers at Indiana University, that analyzes the Twitter activity of accounts and rates the likelihood that they are managed by software. As you can see, according to Botometer, my own Twitter account is decidedly unrobotic.

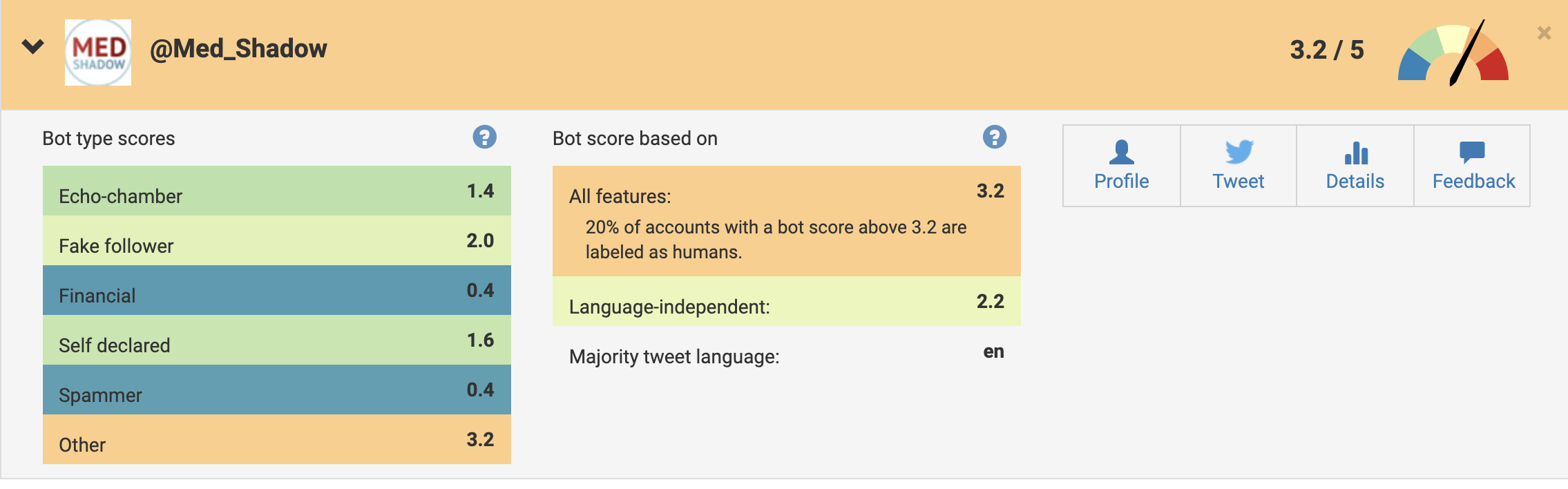

The official MedShadow Foundation account (@Med_Shadow), however, which is run by a human social media manager with help from tools that allow her to schedule posts in advance, gets ranked as slightly more bot-like.

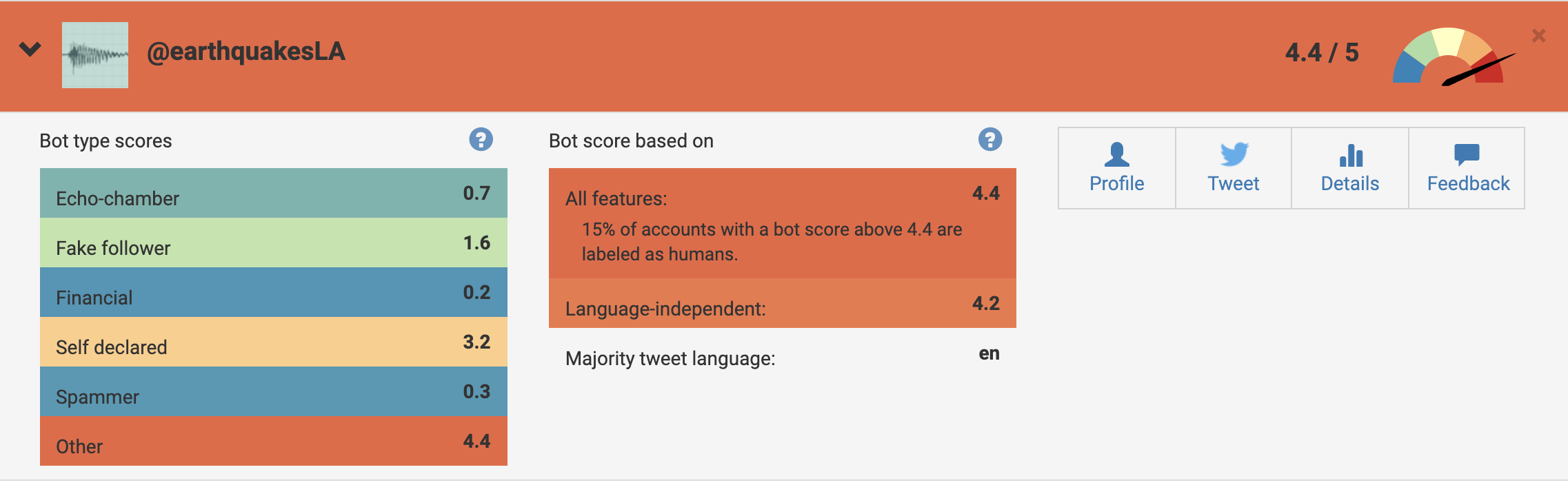

And @EarthquakesLA, a self-declared bot that tweets information about earthquakes in Los Angeles (its Twitter bio reads: “I am a robot that tells you about earthquakes in Los Angeles as they happen. Built by @billsnitzher. Data is from the USGS. Get prepared”), was rated highly likely (4.4 out of 5) to be a bot. Remember, not all bots are nefarious.

There’s no one definitive way for the average person to identify a bot. That’s why Allem says “health literacy and media literacy [are] incredibly important. Differentiating between primary sources and sources that are highly dubious is an important task.”